Java Most Efficient Way to Read Csv File

- Download source files for .NET 2.0 - 540 KB

- Download binaries for .NET 2.0 - 23.8 KB

- Download Profiler information - 5.09 KB

Important update (2016-01-13)

First and foremost, I thank everyone who is contributing in the discussion forum of this article: it amazes me that users of this library are helping each other in this manner.

So... I never foresaw this project getting so popular. It was made with a single purpose in mind: performance, and one can clearly see that it affected its design, maybe too much in retrospect. Five years have passed since the last update and many people are asking where they can contribute code or post issues. I no longer maintain this library, but Paul Hatcher has set up a GitHub repository and a NuGet package. I invite you to go there if you need to maintain an existing project that uses this library.

If, however, you are starting a new project or are willing to do some light refactoring, I also wrote a new library that includes a CSV and fixed width reader/writer which are just as fast as this library, but are much more flexible and handle many more use cases. You can find the CSV reader source in the GitHub repository and download the NuGet package. I will fully maintain this new library and it is already used in many projects in production.

Introduction

One would imagine that parsing CSV files is a straightforward and boring task. I was thinking that too, until I had to parse several CSV files of a couple GB each. After trying to use the OLEDB JET driver and various Regular Expressions, I still ran into serious performance problems. At this point, I decided I would try the custom class option. I scoured the net for existing code, but finding a correct, fast, and efficient CSV parser and reader is not so simple, whatever platform/language you fancy.

I say correct in the sense that many implementations simply use some splitting method like String.Split(). This will, obviously, not handle field values with commas. Better implementations may care about escaped quotes, trimming spaces before and after fields, etc., but none I found were doing it all, and more importantly, in a fast and efficient manner.
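To make the difference concrete, here is a minimal sketch of a quote-aware line splitter next to a naive `String.Split(',')`. It is not the reader's actual code: it assumes a comma delimiter, `"` as the quote character, `""` as an escaped quote and no line breaks inside fields, and it is written as a modern C# top-level program (C# 9 or later) rather than .NET 2.0 code. The helper name `SplitCsvLine` is hypothetical.

```csharp
using System;
using System.Collections.Generic;
using System.Text;

// Hypothetical helper: splits ONE physical line into fields.
// Assumes ',' delimiter, '"' quote, "" = escaped quote, no line breaks inside fields.
static List<string> SplitCsvLine(string line)
{
    var fields = new List<string>();
    var current = new StringBuilder();
    bool inQuotes = false;

    for (int i = 0; i < line.Length; i++)
    {
        char c = line[i];
        if (inQuotes)
        {
            if (c == '"')
            {
                if (i + 1 < line.Length && line[i + 1] == '"') { current.Append('"'); i++; } // "" -> "
                else inQuotes = false;                                                   // closing quote
            }
            else current.Append(c);                  // commas are literal inside quotes
        }
        else if (c == '"') inQuotes = true;          // start of a quoted section (lenient: position not checked)
        else if (c == ',') { fields.Add(current.ToString()); current.Clear(); }
        else current.Append(c);
    }
    fields.Add(current.ToString());                  // last field, possibly empty
    return fields;
}

string sample = "1,\"Smith, John\",\"said \"\"hi\"\"\"";
Console.WriteLine(string.Join(" | ", sample.Split(',')));    // naive: 4 pieces
Console.WriteLine(string.Join(" | ", SplitCsvLine(sample)));  // quote-aware: 3 fields
```

The naive split breaks `"Smith, John"` in two because it cannot tell a delimiter from a comma inside quotes; tracking a single `inQuotes` flag is the smallest change that fixes this.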

And this led to the CSV reader class I present in this article. Its design is based on the System.IO.StreamReader class, and so it is a non-cached, forward-only reader (similar to what is sometimes called a fire-hose cursor).

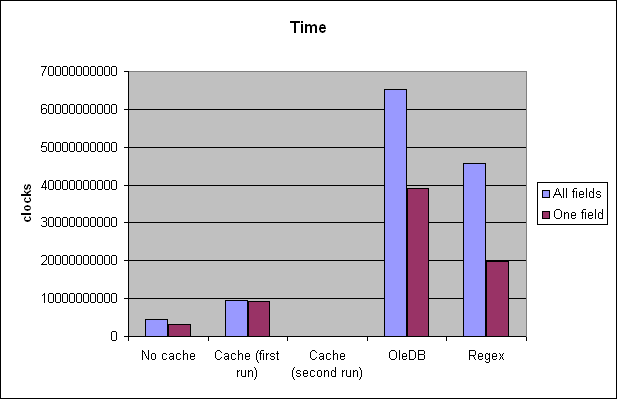

Benchmarking it against both OLEDB and regex methods, it performs about 15 times faster, and yet its memory usage is very low.

To give more down-to-earth numbers, with a 45 MB CSV file containing 145 fields and 50,000 records, the reader was processing about 30 MB/sec. So all in all, it took 1.5 seconds! The machine specs were P4 3.0 GHz, 1024 MB.
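As a quick consistency check, the figures above can be related by simple arithmetic (derived only from the numbers already quoted, not an additional measurement):

$$
\frac{45\ \text{MB}}{1.5\ \text{s}} = 30\ \text{MB/s},\qquad
\frac{50{,}000\ \text{records}}{1.5\ \text{s}} \approx 33{,}000\ \text{records/s},\qquad
\frac{145 \times 50{,}000\ \text{fields}}{1.5\ \text{s}} \approx 4.8 \times 10^{6}\ \text{fields/s}
$$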

- Read about the latest updates in the Latest Updates section below

Supported Features

This reader supports fields spanning multiple lines. The only restriction is that they must be quoted, otherwise it would not be possible to distinguish between malformed data and multi-line values.
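The quoting rule is what makes multi-line values parseable: a line break only ends a record when the parser is outside quotes. The following sketch illustrates that rule on a `TextReader`. It is a simplified illustration, not the library's parser: it assumes `,` as delimiter, `"` as quote and `""` as escaped quote, does not skip empty lines, and is written in modern C# (C# 9 or later). `TryReadRecord` is a hypothetical name.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Text;

// Hypothetical helper: reads one logical record; a quoted field may span several lines.
// Assumes ',' delimiter, '"' quote, "" = escaped quote. Empty lines are NOT skipped.
static bool TryReadRecord(TextReader reader, out List<string> fields)
{
    fields = new List<string>();
    if (reader.Peek() == -1) return false;           // end of input
    var current = new StringBuilder();
    bool inQuotes = false;

    while (true)
    {
        int next = reader.Read();
        if (next == -1)
        {
            if (inQuotes) throw new FormatException("Quoted field not closed before end of input.");
            break;                                   // last record without a trailing newline
        }
        char c = (char)next;
        if (inQuotes)
        {
            if (c == '"')
            {
                if (reader.Peek() == '"') { reader.Read(); current.Append('"'); } // "" -> "
                else inQuotes = false;
            }
            else current.Append(c);                  // '\r' and '\n' are kept as field data
        }
        else if (c == '"') inQuotes = true;
        else if (c == ',') { fields.Add(current.ToString()); current.Clear(); }
        else if (c == '\n') break;                   // end of record
        else if (c == '\r') { if (reader.Peek() == '\n') reader.Read(); break; }
        else current.Append(c);
    }
    fields.Add(current.ToString());
    return true;
}

var input = new StringReader("id,note\n1,\"first line\nsecond line\"\n2,plain\n");
while (TryReadRecord(input, out var record))
    Console.WriteLine($"{record.Count} fields; last = [{record[record.Count - 1]}]");
```

Because the newline test only happens in the "outside quotes" branch, an unquoted line break always ends the record, which is exactly why multi-line values have to be quoted.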

Basic data-binding is possible via the System.Data.IDataReader interface implemented by the reader.

You can specify custom values for these parameters:

- Default missing field action;

- Default malformed CSV action;

- Buffer size;

- Field headers option;

- Trimming spaces option;

- Field delimiter character;

- Quote character;

- Escape character (can be the same as the quote character);

- Commented line character.
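The following sketch shows how these characters can interact when parsing a single line, including the case where the escape character equals the quote character (`""`) and the case where it differs (for example `\"`). The function `TryParseLine` and its parameters are hypothetical and do not mirror the library's constructor or property names; in this sketch, trimming applies to unquoted fields only, and the code is modern C# (C# 9 or later).

```csharp
using System;
using System.Collections.Generic;
using System.Text;

// Hypothetical helper: parses one line with configurable delimiter, quote, escape and
// comment characters. Returns false for a commented line. Trimming, when enabled,
// applies to unquoted fields only. Not the library's API.
static bool TryParseLine(string line, char delimiter, char quote, char escape,
                         char comment, bool trim, out List<string> fields)
{
    fields = new List<string>();
    if (line.Length > 0 && line[0] == comment) return false;   // commented line: skip it

    var current = new StringBuilder();
    bool inQuotes = false, wasQuoted = false;
    for (int i = 0; i < line.Length; i++)
    {
        char c = line[i];
        if (inQuotes)
        {
            // escape + quote => literal quote; also covers escape == quote ("")
            if (c == escape && i + 1 < line.Length && line[i + 1] == quote) { current.Append(quote); i++; }
            else if (c == quote) inQuotes = false;
            else current.Append(c);
        }
        else if (c == quote) { inQuotes = true; wasQuoted = true; }
        else if (c == delimiter)
        {
            fields.Add(Finish(current, wasQuoted, trim));
            current.Clear();
            wasQuoted = false;
        }
        else current.Append(c);
    }
    fields.Add(Finish(current, wasQuoted, trim));
    return true;
}

// Applies the trimming option to a completed field.
static string Finish(StringBuilder sb, bool quoted, bool trim) =>
    trim && !quoted ? sb.ToString().Trim() : sb.ToString();

// ';' delimiter, '"' quote, '\' escape, '#' comment, trimming on
TryParseLine("a ;\"b;c\";\"say \\\"hi\\\"\"", ';', '"', '\\', '#', true, out var parsed);
Console.WriteLine(string.Join(" | ", parsed));
Console.WriteLine(TryParseLine("# just a comment", ';', '"', '\\', '#', true, out _)); // False
```

Checking the escape character before the quote character is what lets a single code path handle both the `""` and `\"` conventions.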

If the CSV contains field headers, they can be used to access a specific field.
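Accessing fields by header name usually comes down to building a name-to-index map once and reusing it for every record. The sketch below assumes case-insensitive names, matching the case-insensitive headers mentioned in the version 3.0 history; `BuildHeaderIndex` is a hypothetical helper, not part of the library, written in modern C# (C# 9 or later).

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper: maps each header name to its column index, ignoring case.
static Dictionary<string, int> BuildHeaderIndex(string[] headers)
{
    var index = new Dictionary<string, int>(StringComparer.OrdinalIgnoreCase);
    for (int i = 0; i < headers.Length; i++)
        index[headers[i]] = i;                    // a duplicate name keeps the last index
    return index;
}

string[] headerRow = { "Id", "Name", "City" };
string[] values = { "42", "Ada", "London" };
var byName = BuildHeaderIndex(headerRow);
Console.WriteLine(values[byName["name"]]);       // "name" matches the "Name" header
```

Building the map once per file keeps each by-name access to a dictionary lookup instead of a scan over the headers.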

When the CSV data appears to be malformed, the reader will fail fast and throw a meaningful exception stating where the fault occurred and providing the current content of the buffer.
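A fail-fast parser stops at the first character that cannot be valid and reports its location instead of guessing. The sketch below shows the idea for one line: after a closing quote, only a delimiter or the end of the line is accepted. Using `FormatException` and this message layout is an assumption made for the example, since the library defines its own exception types; `ParseStrict` and `Malformed` are hypothetical names, and the code is modern C# (C# 9 or later).

```csharp
using System;
using System.Collections.Generic;
using System.Text;

// Hypothetical strict parser for one line: ',' delimiter, '"' quote, "" = escaped quote.
// Throws on the first malformed character instead of trying to recover.
static List<string> ParseStrict(string line, long recordIndex)
{
    var fields = new List<string>();
    var current = new StringBuilder();
    int i = 0;
    while (true)
    {
        if (i < line.Length && line[i] == '"')
        {
            i++;                                      // skip opening quote
            while (true)
            {
                if (i >= line.Length)
                    throw Malformed(line, recordIndex, i, "missing closing quote");
                if (line[i] == '"')
                {
                    if (i + 1 < line.Length && line[i + 1] == '"') { current.Append('"'); i += 2; continue; }
                    i++;                              // skip closing quote
                    break;
                }
                current.Append(line[i++]);
            }
            // after a closing quote, only a delimiter or the end of the line is valid
            if (i < line.Length && line[i] != ',')
                throw Malformed(line, recordIndex, i, "expected ',' after quoted field");
        }
        else
        {
            while (i < line.Length && line[i] != ',') current.Append(line[i++]);
        }
        fields.Add(current.ToString());
        current.Clear();
        if (i >= line.Length) return fields;
        i++;                                          // skip the delimiter
    }
}

// Builds an exception that says where parsing stopped and what the input was.
static FormatException Malformed(string line, long record, int position, string reason) =>
    new FormatException($"Malformed CSV at record {record}, position {position}: {reason}. Content: [{line}]");

try { ParseStrict("1,\"abc\"x,3", 7); }
catch (FormatException ex) { Console.WriteLine(ex.Message); }
```

Including the record number, the character position and the raw content in the message is what makes such an exception actionable when the input is several gigabytes long.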

A cache of the field values is kept for the current record only, but if you need dynamic access, I also included a cached version of the reader, CachedCsvReader, which internally stores records as they are read from the stream. Of course, using a cache this way makes the memory requirements much higher, as the full set of data is held in memory.
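The trade-off of the cached approach can be seen in a few lines: every record is kept as its own array, so any record can be revisited in any order, but memory grows with the size of the file. This sketch deliberately splits lines with `string.Split(',')` (no quoting, no multi-line fields) to keep the focus on caching; `LoadAll` is a hypothetical name, this is not the library's implementation, and the code is modern C# (C# 9 or later).

```csharp
using System;
using System.Collections.Generic;
using System.IO;

// Hypothetical helper: caches every record in memory so records can be read in any order.
// string.Split(',') is a deliberate simplification (no quoting, no multi-line fields).
static List<string[]> LoadAll(TextReader reader)
{
    var cache = new List<string[]>();
    for (var line = reader.ReadLine(); line != null; line = reader.ReadLine())
        cache.Add(line.Split(','));                  // memory grows with every record kept
    return cache;
}

var records = LoadAll(new StringReader("id,name\n1,Ada\n2,Linus"));
Console.WriteLine(records[2][1]);                    // random access, in any order
Console.WriteLine(records[1][1]);
```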

Latest Updates (3.8.1 Release)

- Fixed bug with missing field handling;

- Converted solution to VS 2010 (still targets .NET 2.0).

Benchmark and Profiling

You can find the code for these benchmarks in the demo project. I tried to be fair and follow the same pattern for each parsing method. The regex used comes from Jeffrey Friedl's book, and can be found on page 271. It doesn't handle trimming and multi-line fields.

The test file contains 145 fields, and is about 45 MB (included in the demo project as a RAR archive).

I also included the raw data from the benchmark program and from the CLR Profiler for .NET 2.0.

Using the Code

The class design follows System.IO.StreamReader as much as possible. The parsing mechanism introduced in version 2.0 is a bit trickier because we handle the buffering and the new line parsing ourselves. However, because the task logic is clearly encapsulated, the flow is easier to understand. All the code is well documented and structured, but if you have any questions, just post a comment.
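Handling the buffering ourselves means line endings have to be recognised manually, and the version history notes that the reader auto-detects `\r`, `\n` and `\r\n`. The sketch below illustrates only that part: it reads through a fixed-size `char` buffer and remembers a trailing `\r` so that a `\r\n` pair split across two buffer fills still counts as one line ending. It ignores quoting entirely, `ReadLines` is a hypothetical name, and it is modern C# (C# 9 or later) rather than the library's .NET 2.0 code.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using System.Text;

// Hypothetical helper: yields lines read through a fixed-size buffer, treating
// "\r", "\n" and "\r\n" as line endings. Quoting is ignored in this sketch.
static IEnumerable<string> ReadLines(TextReader reader, int bufferSize)
{
    var buffer = new char[bufferSize];
    var line = new StringBuilder();
    bool pendingCr = false;                   // previous char was '\r'; a '\n' right after it is skipped
    int read;
    while ((read = reader.Read(buffer, 0, buffer.Length)) > 0)
    {
        for (int i = 0; i < read; i++)
        {
            char c = buffer[i];
            if (pendingCr)
            {
                pendingCr = false;
                if (c == '\n') continue;      // second half of "\r\n", possibly from the previous fill
            }
            if (c == '\r' || c == '\n')
            {
                pendingCr = c == '\r';
                yield return line.ToString();
                line.Clear();
            }
            else line.Append(c);
        }
    }
    if (line.Length > 0) yield return line.ToString();  // final line without a terminator
}

// A 2-char buffer forces "\r\n" to straddle two reads in this sample.
foreach (var l in ReadLines(new StringReader("a\r\nb\rc\nd"), 2))
    Console.WriteLine(l);
```

The buffer size of 2 is deliberately tiny so the boundary case is exercised; a real reader would use a buffer of several kilobytes, which is why the buffer size is a configurable parameter.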

Basic Usage Scenario

```csharp
using System;
using System.IO;
using LumenWorks.Framework.IO.Csv;

void ReadCsv()
{
    // open the file "data.csv" which is a CSV file with headers
    using (CsvReader csv = new CsvReader(new StreamReader("data.csv"), true))
    {
        int fieldCount = csv.FieldCount;
        string[] headers = csv.GetFieldHeaders();

        while (csv.ReadNextRecord())
        {
            for (int i = 0; i < fieldCount; i++)
                Console.Write(string.Format("{0} = {1};", headers[i], csv[i]));
            Console.WriteLine();
        }
    }
}
```

Simple Data-Binding Scenario (ASP.NET)

```csharp
using System.IO;
using LumenWorks.Framework.IO.Csv;

void ReadCsv()
{
    // open the file "data.csv" which is a CSV file with headers
    using (CsvReader csv = new CsvReader(new StreamReader("data.csv"), true))
    {
        myDataRepeater.DataSource = csv;
        myDataRepeater.DataBind();
    }
}
```

Complex Data-Binding Scenario (ASP.NET)

Due to the way both the System.Web.UI.WebControls.DataGrid and System.Web.UI.WebControls.GridView handle System.ComponentModel.ITypedList, complex binding in ASP.NET is not possible. The only way around this limitation would be to wrap each field in a container implementing System.ComponentModel.ICustomTypeDescriptor.

Anyway, even if it were possible, using the simple data-binding method is much more efficient.

For the curious among you, the bug comes from the fact that the two grid controls completely ignore the property descriptors returned by System.ComponentModel.ITypedList, and rely instead on System.ComponentModel.TypeDescriptor.GetProperties(...), which obviously returns the properties of the string array and not our custom properties. See System.Web.UI.WebControls.BoundColumn.OnDataBindColumn(...) in a disassembler.

Complex Data-Binding Scenario (Windows Forms)

```csharp
using System.IO;
using LumenWorks.Framework.IO.Csv;

void ReadCsv()
{
    // open the file "data.csv" which is a CSV file with headers
    using (CachedCsvReader csv = new CachedCsvReader(new StreamReader("data.csv"), true))
    {
        myDataGrid.DataSource = csv;
    }
}
```

Custom Error Handling Scenario

```csharp
using System;
using System.IO;
using LumenWorks.Framework.IO.Csv;

void ReadCsv()
{
    using (CsvReader csv = new CsvReader(new StreamReader("data.csv"), true))
    {
        // missing fields do not throw; they are returned as null instead
        csv.MissingFieldAction = MissingFieldAction.ReplaceByNull;

        int fieldCount = csv.FieldCount;
        string[] headers = csv.GetFieldHeaders();

        while (csv.ReadNextRecord())
        {
            for (int i = 0; i < fieldCount; i++)
                Console.Write(string.Format("{0} = {1};", headers[i], csv[i] == null ? "MISSING" : csv[i]));
            Console.WriteLine();
        }
    }
}
```

Custom Error Handling Using Events Scenario

```csharp
using System;
using System.IO;
using LumenWorks.Framework.IO.Csv;

void ReadCsv()
{
    using (CsvReader csv = new CsvReader(new StreamReader("data.csv"), true))
    {
        // raise the ParseError event instead of throwing on malformed data
        csv.DefaultParseErrorAction = ParseErrorAction.RaiseEvent;
        csv.ParseError += csv_ParseError;

        int fieldCount = csv.FieldCount;
        string[] headers = csv.GetFieldHeaders();

        while (csv.ReadNextRecord())
        {
            for (int i = 0; i < fieldCount; i++)
                Console.Write(string.Format("{0} = {1};", headers[i], csv[i]));
            Console.WriteLine();
        }
    }
}

void csv_ParseError(object sender, ParseErrorEventArgs e)
{
    // if the error is that a field is missing, then skip to the next line
    if (e.Error is MissingFieldCsvException)
    {
        Console.Write("--MISSING FIELD ERROR OCCURRED");
        e.Action = ParseErrorAction.AdvanceToNextLine;
    }
}
```

History

Version 3.8.1 (2011-11-10)

- Fixed bug with missing field handling.

- Converted solution to VS 2010 (still targets .NET 2.0).

Version 3.8 (2011-07-05)

- Empty header names in CSV files are now replaced by a default name that can be customized via the new DefaultHeaderName property (by default, it is "Column" + column index).

Version 3.7.2 (2011-05-17)

- Fixed a bug when handling missing fields.

- Strongly named the main assembly.

Version 3.7.1 (2010-11-03)

- Fixed a bug when handling whitespace at the end of a file.

Version 3.7 (2010-03-30)

- Breaking: Added more field value trimming options.

Version 3.6.2 (2008-10-09)

- Fixed a bug when calling MoveTo in a particular action sequence;

- Fixed a bug when extra fields are present in a multiline record;

- Fixed a bug when there is a parse error while initializing.

Version 3.6.1 (2008-07-16)

- Fixed a bug with RecordEnumerator caused by reusing the same array over each iteration.

Version 3.6 (2008-07-09)

- Added a web demo project;

- Fixed a bug when loading CachedCsvReader into a DataTable and the CSV has no header.

Version 3.5 (2007-11-28)

- Fixed a bug when initializing CachedCsvReader without having read a record first.

Version 3.4 (2007-10-23)

- Fixed a bug with the IDataRecord implementation where GetValue/GetValues should return DBNull.Value when the field value is empty or null;

- Fixed a bug where no exception is raised if a delimiter is not present after a non-final quoted field;

- Fixed a bug when trimming unquoted fields and whitespace spans over 2 buffers.

Version three.three (2007-01-xiv)

- Added the option to turn off skipping empty lines via the property

SkipEmptyLines(on by default); - Fixed a issues with the handling of a delimiter at the terminate of a record preceded by a quoted field.

Version 3.2 (2006-12-11)

- Slightly modified the way missing fields are handled;

- Fixed a bug where the call to CsvReader.ReadNextRecord() would return false for a CSV file containing only 1 line ending with a new line character and no header.

Version 3.1.2 (2006-08-06)

- Updated dispose pattern;

- Fixed a bug when SupportsMultiline is false;

- Fixed a bug where the IDataReader schema column "DataType" returned DbType.String instead of typeof(string).

Version 3.1.1 (2006-07-25)

- Added a SupportsMultiline property to help boost performance when multi-line support is not needed;

- Added two new constructors to support common scenarios;

- Added support for when the base stream returns a length of 0;

- Fixed a bug when the FieldCount property is accessed before having read any record;

- Fixed a bug when the delimiter is a whitespace;

- Fixed a bug in ReadNextRecord(...) by eliminating its recursive behavior when initializing headers;

- Fixed a bug when EOF is reached when reading the first record;

- Fixed a bug where no exception would be thrown if the reader has reached EOF and a field is missing.

Version 3.0 (2006-05-15)

- Introduced equal support for .NET 1.1 and .NET 2.0;

- Added extensive support for malformed CSV files;

- Added complete support for data-binding;

- Made the current raw data available;

- Field headers are now accessed via an array (breaking change);

- Made field headers case insensitive (thanks to Marco Dissel for the suggestion);

- Relaxed restrictions when the reader has been disposed;

- CsvReader supports 2^63 records;

- Added more test coverage;

- Upgraded to .NET 2.0 release version;

- Fixed an issue when accessing certain properties without having read any data (notably FieldHeaders).

Version 2.0 (2005-08-10)

- Ported code to .NET 2.0 (July 2005 CTP);

- Thoroughly debugged via extensive unit testing (special thanks to shriop);

- Improved speed (now 15 times faster than OLEDB);

- Consumes half the memory of version 1.0;

- Can specify a custom buffer size;

- Full Unicode support;

- Auto-detects line ending, be it \r, \n, or \r\n;

- Better exception handling;

- Supports the "field1\rfield2\rfield3\n" pattern (used by Unix);

- Parsing code completely refactored, resulting in much cleaner code.

Version 1.1 (2005-01-15)

- 1.1: Added support for multi-line fields.

Version 1.0 (2005-01-09)

- 1.0: First release.

This article, along with any associated source code and files, is licensed under The MIT License

Source: https://www.codeproject.com/Articles/9258/A-Fast-CSV-Reader
